Over the years, technology has become integrated into people’s daily lives, and with that has come an increase in the use of artificial-intelligence systems. These systems, however, are designed to learn from the vast amounts of data produced within different societies, and as a result, they have given rise to many equity issues.

Take Boston, for example, a city riddled with potholes. In an effort to speed up the patching process, government officials released an app, StreetBump, so that people could report potholes to the city and help detect problem areas. While it seemed like a good idea at the time, a problem quickly emerged: people in lower-income groups were less likely to have smartphones and, thus, access to the app. Consequently, the data lacked input from significant parts of the population, most notably from those who have the fewest resources.

Ethical issues such as the one above are becoming increasingly common in AI. Recognizing this, Dr. Afra Mashhadi, assistant professor at the University of Washington Bothell’s School of STEM, works to design solutions that can be used in policymaking and “ensures that they are inclusive of entire populations, not just certain subsets.”

Raising the bar

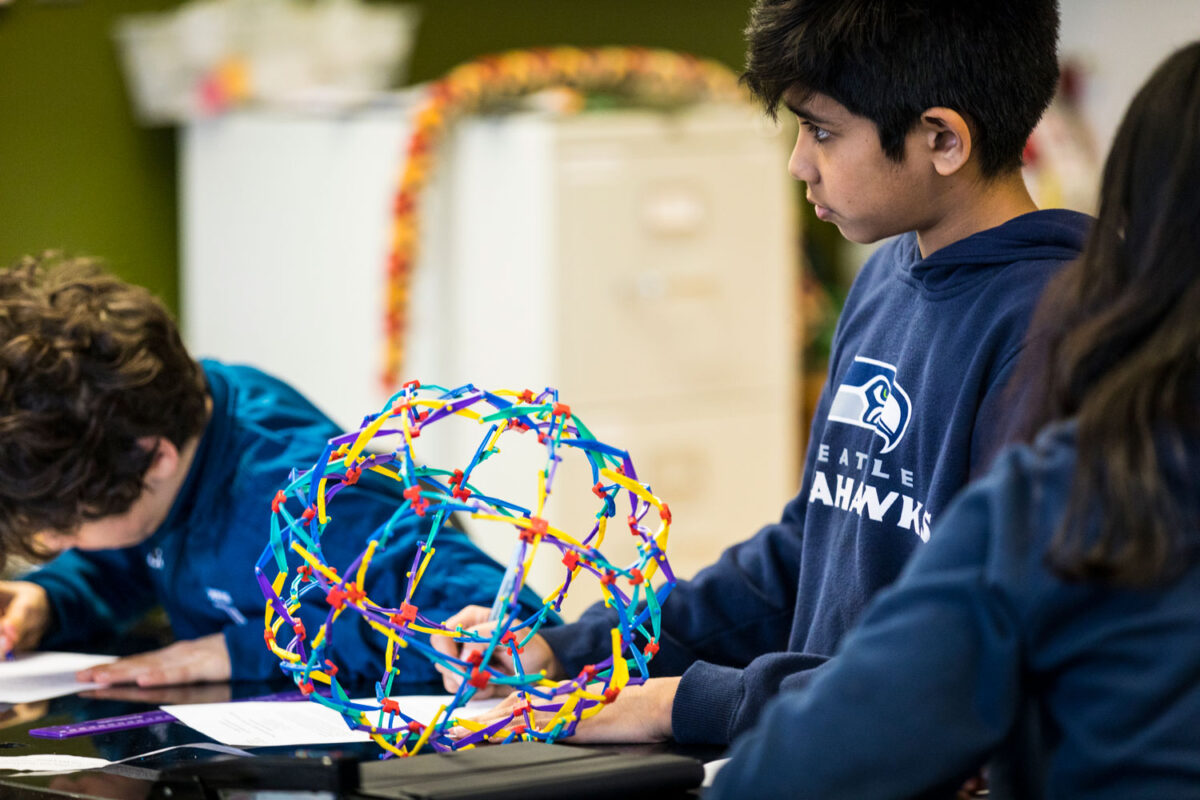

Mashhadi recognizes that it’s her students who will go on to design and implement future AI models, which is why she puts an emphasis on training “ethical, moral software engineers.” And while this is highly important, she said there is a tendency within the field to brush over what that means and how it translates into practice. “It’s often a 15-minute conversation point that occurs when going over a syllabus,” she said. “But that’s not good enough.”

In an effort to “RAISE” the bar, Mashhadi joined the UW’s Responsibility in AI Systems & Experiences (RAISE) as a steering committee member. RAISE envisions a future where AI systems are built with ethical, value-centric and human-centric considerations from day one.

“We are working together to educate and train a new generation of students and scholars from a broad and diverse set of disciplines who will play a crucial role in the future of AI systems and experiences,” she said.

One of these students is Michael Cho, a senior in the School of STEM. “I never realized how our prejudices and stereotypes are reflected in the technology that we design and build,” he said. “The software reinforces a lot of negative stereotypes and misconceptions that will be passed down to younger generations. My goal is to help illuminate these problems in technology and research potential solutions.”

He is now working with Mashhadi on new research paradigms in AI.

Ethics in AI

Mashhadi has also developed an entire course that empowers students to think critically about ethical boundaries, taking into account the social and political issues of machine learning. The class covers topics including implicit bias; privacy-related issues in artificial intelligence, such as surveillance; and the criteria associated with being a fair software engineer.

Jay Quedado, another senior in STEM, took the course during the 2021 spring quarter and now serves as Mashhadi’s teaching assistant. “The class really opened my eyes,” she said. “Artificial intelligence systems are not adequately addressing issues of fairness or bias. More education on these topics is needed in the industry — and that starts in higher education.”

One topic Mashhadi covered that stood out most to Quedado was recidivism, or how likely a person convicted of a crime is to reoffend. “Technology is used to gain insight on incarcerated individuals including their race, religion and socioeconomic status, and that then gets used to create statistics regarding how likely they are to recommit their crimes,” she said. “There are clear issues with fairness and biases in this method of collecting and analyzing data. It is just one of many examples of how we need to be better.”

By the end of the course, Mashhadi hopes her students understand that measuring fairness and accounting for biases in socio-technical systems is not a matter of applying a set of “magical methods” to fix the biases observed in AI. Rather, she said, we need “a thinking framework that will allow future computer scientists to examine the socio-technical challenges of current and future technologies.”

She will present her pedagogical findings from teaching this class at the University’s Teaching & Learning Symposium next month and at the 2022 ACM CHI Conference on Human Factors in Computing Systems, the premier international conference on human-computer interaction, presented by the Association for Computing Machinery’s Special Interest Group on Computer-Human Interaction.

Data drives equity

While training ethical engineers to create the software of tomorrow, Mashhadi is also conducting research to help improve the software of today. One project involves determining how people’s relationships to parks changed over the past two years. Mashhadi and her research partner, Dr. Spencer Wood, a senior research scientist with EarthLab in the UW’s College of the Environment, turned to Instagram to collect data.

Mashhadi explained that during the coronavirus pandemic, public parks became a lifeline as they were perhaps the only places that were able to maintain normalcy even when COVID-19 cases were surging. “The pandemic and the resulting economic recession have negatively affected many people’s physical, social and psychological health,” she said.

From their findings, they learned that parks and green spaces may have ameliorated the negative effects of the pandemic by “creating opportunities for outdoor recreation and nature exposure while other public activities and gatherings were restricted due to risk of disease transmissions.”

Their study, however, uncovered more than just visitation trends. It also revealed how biases in data collection can emerge. Reviewing the data from Instagram posts, they determined that the data did not represent the entire population because only certain subsets of people use the app or regularly post content. “Our analyses highlighted that while there is tremendous value in social media and mobility data, the research community does not have access to the volume of data that is necessary to fully understand the issue, such as the contextual information about the behavior of park visitors,” Mashhadi said.

“Having a more robust set of data would make it possible to appropriately leverage it for analyses that could inform management decisions.”

Forward thinking

Moving forward, Mashhadi hopes to help meet this need by starting discussions in the field about how social-computing researchers can respond to increasingly restricted access to social media data and develop alternative methodologies.

“I believe in the coming years we will start to see the emergence of heterogeneous data sources that are less centralized and owned by big companies and that are instead generated and governed by people themselves,” she said.

“Of course, for that to happen,” Mashhadi said, “we need to increase public awareness about machine learning and privacy beyond scaring people with tabloids — and instead educate them to be part of the decision making.”